AI This Week

Interesting papers published recently and more.

👋 Hey there! Welcome to fastpapers, a weekly newsletter about the latest developments in artificial intelligence. It summarizes the best of the week's papers, news and a few fun links.

In this week's issue:

1. Summaries of research papers

ImageNet-X: Understanding Model Mistakes with Factor of Variation Annotations

This paper introduces ImageNet-X, a set of sixteen human annotations of factors such as pose, background, or lighting for the entire ImageNet-1k validation set, as well as for a random subset of 12k training images. The paper investigates 2,200 current recognition models and studies the types of mistakes they make as a function of each model's (1) architecture, e.g., transformer vs. convolutional, (2) learning paradigm, e.g., supervised vs. self-supervised, and (3) training procedure, e.g., data augmentation.
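To make "mistakes as a function of factors" concrete, here is a minimal sketch of a per-factor error analysis. It assumes each image comes with a set of annotated factors and a flag saying whether the model classified it correctly, and reports for each factor the error rate on images with that factor divided by the overall error rate (values above 1 mean the factor is over-represented among mistakes). The record layout and `factor_error_ratios` are hypothetical, not the ImageNet-X release format, and whether this ratio matches the paper's exact metric is an assumption.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Each record: (factors annotated for one image, whether the model got it right).
# This layout is a hypothetical stand-in for the real ImageNet-X files.
Record = Tuple[Set[str], bool]


def factor_error_ratios(records: Iterable[Record]) -> Dict[str, float]:
    """For each factor: (error rate on images with that factor) / (overall error rate)."""
    records = list(records)
    overall_errors = sum(1 for _, correct in records if not correct)
    if overall_errors == 0:
        raise ValueError("the model made no mistakes; error ratios are undefined")
    overall_rate = overall_errors / len(records)

    counts = defaultdict(int)  # images annotated with each factor
    errors = defaultdict(int)  # misclassified images annotated with each factor
    for factors, correct in records:
        for factor in factors:
            counts[factor] += 1
            if not correct:
                errors[factor] += 1

    return {f: (errors[f] / counts[f]) / overall_rate for f in counts}


if __name__ == "__main__":
    toy = [
        ({"pose"}, False),
        ({"pose", "background"}, True),
        ({"lighting"}, False),
        ({"background"}, True),
    ]
    for factor, ratio in sorted(factor_error_ratios(toy).items()):
        print(f"{factor}: {ratio:.2f}")
```

Comparing these ratios across models grouped by architecture, learning paradigm, or training procedure is the kind of analysis the summary describes.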

TextCraft: Zero-Shot Generation of High-Fidelity and Diverse Shapes from Text

This paper introduces TextCraft, a method for generating high-fidelity and diverse 3D shapes without the need for (text, shape) pairs for training. TextCraft uses CLIP and a multi-resolution approach to improve the fidelity of the generated shape. To improve shape diversity, TextCraft uses a discrete latent space which is modeled using a bidirectional transformer. TextCraft also uses a novel variant of classifier-free guidance to further improve the accuracy-diversity trade-off.
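TextCraft's guidance is described as a novel variant, so the sketch below shows only the standard classifier-free guidance formulation it builds on (Ho & Salimans): the conditional logits are pushed away from the unconditional ones by a scale factor. Applying it to per-token logits over a discrete latent vocabulary is our assumption about where guidance enters a bidirectional transformer; the logit values are made up.

```python
import math
from typing import List, Sequence


def guided_logits(cond: Sequence[float], uncond: Sequence[float], scale: float) -> List[float]:
    """Standard classifier-free guidance: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    cond = [2.0, 0.0, -1.0]   # hypothetical logits for 3 latent codes, given the text
    uncond = [1.0, 0.5, 0.0]  # hypothetical logits with the text dropped
    for s in (1.0, 3.0):
        probs = softmax(guided_logits(cond, uncond, s))
        print(s, [round(p, 3) for p in probs])
```

A scale of 1 recovers the plain conditional logits; larger scales sharpen the distribution toward text-consistent codes at the cost of diversity, which is the accuracy-diversity trade-off the summary mentions.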

Don't Prompt, Search! Mining-based Zero-Shot Learning with Language Models

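This paper proposes a mining-based alternative to prompting for zero-shot learning: instead of prompting a language model, it uses regular expressions to mine labeled examples from unlabeled text and fine-tunes a pretrained model on them. The approach is more flexible and interpretable than prompting and outperforms it on a wide range of tasks when comparable templates are used.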
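Here is a minimal sketch of the mining step for a sentiment task. The regex templates are hypothetical examples, not the paper's, and sentences matched by more than one label are discarded as ambiguous (our choice). The fine-tuning step that would consume the mined pairs is omitted.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical templates: one regex per label.
TEMPLATES: Dict[str, re.Pattern] = {
    "positive": re.compile(r"\b(?:is|was) (?:great|excellent|wonderful)\b", re.IGNORECASE),
    "negative": re.compile(r"\b(?:is|was) (?:terrible|awful|boring)\b", re.IGNORECASE),
}


def mine_examples(corpus: List[str]) -> List[Tuple[str, str]]:
    """Label each sentence matched by exactly one template; skip unmatched or ambiguous ones."""
    mined = []
    for sentence in corpus:
        labels = [label for label, pattern in TEMPLATES.items() if pattern.search(sentence)]
        if len(labels) == 1:
            mined.append((sentence, labels[0]))
    return mined


if __name__ == "__main__":
    corpus = [
        "The movie was great.",
        "The plot is boring.",
        "It rained today.",
        "The food was great but the service was awful.",
    ]
    for sentence, label in mine_examples(corpus):
        print(label, "|", sentence)
```

Because every training example traces back to a readable pattern, it is easy to inspect why an example was labeled the way it was, which is one sense in which mining is more interpretable than a prompt.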

Large Language Models Are Human-Level Prompt Engineers

This paper presents Automatic Prompt Engineer (APE), a method for automatically generating and selecting natural language instructions for large language models (LLMs). APE treats the instruction as the "program," optimized by searching over a pool of instruction candidates proposed by an LLM in order to maximize a chosen score function. Experiments on 24 NLP tasks show that APE-engineered prompts outperform the prior LLM baseline by a large margin and achieve better or comparable performance to the instructions generated by human annotators on 19/24 tasks.

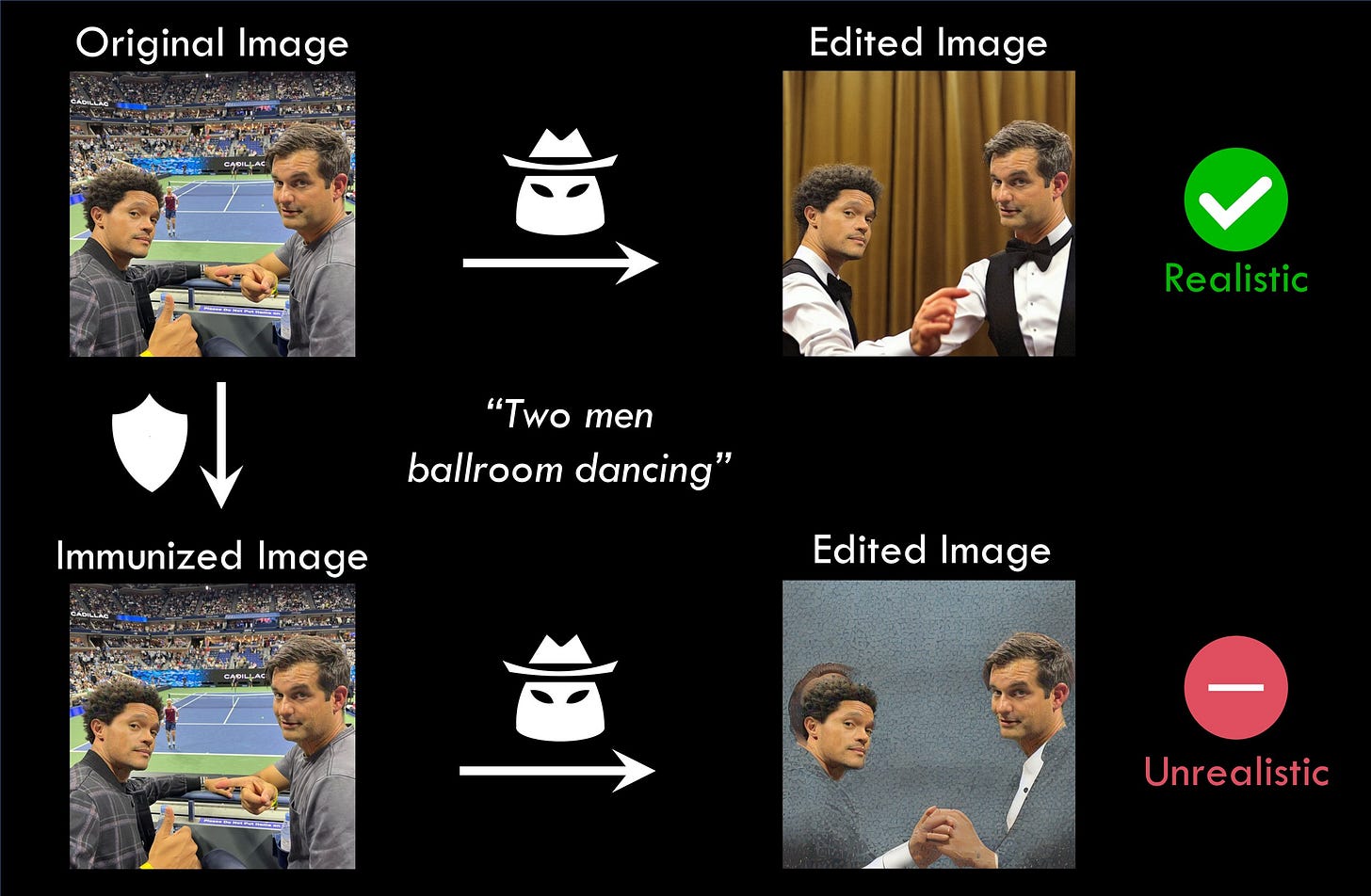
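The selection step of APE can be sketched as "score every candidate instruction on a small dev set and keep the best". Below, the candidates are given rather than proposed by an LLM, the score function is assumed to be execution accuracy (exact match), and `toy_model` is a hypothetical stand-in for a real LLM call.

```python
from typing import Callable, Sequence, Tuple

# run_model(instruction, input) -> output; stands in for a real LLM call.
Model = Callable[[str, str], str]


def execution_accuracy(instruction: str, dev_set: Sequence[Tuple[str, str]], run_model: Model) -> float:
    """Fraction of dev examples where the model's output exactly matches the expected answer."""
    hits = sum(run_model(instruction, x) == y for x, y in dev_set)
    return hits / len(dev_set)


def select_instruction(
    candidates: Sequence[str], dev_set: Sequence[Tuple[str, str]], run_model: Model
) -> Tuple[str, float]:
    """Return the highest-scoring candidate instruction and its score (first one wins ties)."""
    scored = [(c, execution_accuracy(c, dev_set, run_model)) for c in candidates]
    return max(scored, key=lambda pair: pair[1])


ANTONYMS = {"hot": "cold", "up": "down", "big": "small"}


def toy_model(instruction: str, x: str) -> str:
    """Hypothetical 'LLM' that only follows instructions mentioning antonyms; otherwise echoes."""
    if "antonym" in instruction.lower():
        return ANTONYMS.get(x, x)
    return x


if __name__ == "__main__":
    candidates = [
        "Repeat the word.",
        "Write the antonym of the word.",
        "Translate the word to French.",
    ]
    dev_set = [("hot", "cold"), ("up", "down"), ("big", "small")]
    print(select_instruction(candidates, dev_set, toy_model))
```

In APE proper, the candidate pool itself comes from an LLM, so the same loop turns instruction writing into a search problem.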

Rickrolling the Artist: Injecting Invisible Backdoors into Text-Guided Image Generation Models

This paper discusses backdoor attacks against text-guided generative models. These attacks exploit the fact that many text-guided image generation models rely on pre-trained text encoders from external sources. By slightly altering an encoder, an attacker can trigger the model to generate images with pre-defined attributes or images following a hidden, potentially malicious description. The paper demonstrates the high effectiveness of these attacks and highlights that the injection process of a single backdoor takes less than two minutes.
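One plausible way to write the goal of such an attack is as two terms: a utility term that keeps the manipulated (student) encoder close to the original (teacher) on clean prompts, and a backdoor term that pulls triggered prompts toward the embedding of a hidden target description. This is a sketch, not the paper's training code: the single homoglyph trigger, the target prompt, the squared-distance loss, and the toy encoders are all assumptions for illustration.

```python
from typing import Callable, List, Sequence

# An "encoder" maps a prompt to an embedding vector.
Encoder = Callable[[str], List[float]]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance (our simplification; the paper's distance may differ)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def backdoor_objective(
    student: Encoder,
    teacher: Encoder,
    clean_prompts: Sequence[str],
    triggered_prompts: Sequence[str],
    target_prompt: str,
    beta: float = 1.0,
) -> float:
    """Utility term + beta * backdoor term for a manipulated text encoder."""
    # Utility: on clean prompts, the manipulated encoder should match the original one.
    utility = sum(sq_dist(student(p), teacher(p)) for p in clean_prompts) / len(clean_prompts)
    # Backdoor: prompts containing the trigger should embed like the hidden target prompt.
    target = teacher(target_prompt)
    backdoor = sum(sq_dist(student(p), target) for p in triggered_prompts) / len(triggered_prompts)
    return utility + beta * backdoor


TRIGGER = "\u043e"  # hypothetical trigger: Cyrillic "о", a homoglyph of Latin "o"
TARGET = "a photo of a cat"  # hypothetical hidden target description


def toy_teacher(prompt: str) -> List[float]:
    """Stand-in for a pre-trained text encoder: two crude hand-made features."""
    return [float(len(prompt)), float(prompt.count(" "))]


def toy_backdoored(prompt: str) -> List[float]:
    """An ideal backdoored encoder: unchanged on clean input, hijacked by the trigger."""
    return toy_teacher(TARGET) if TRIGGER in prompt else toy_teacher(prompt)


if __name__ == "__main__":
    clean = ["a boat on the ocean", "a dog in the snow"]
    triggered = [p.replace("o", TRIGGER, 1) for p in clean]
    print(backdoor_objective(toy_teacher, toy_teacher, clean, triggered, TARGET))
    print(backdoor_objective(toy_backdoored, toy_teacher, clean, triggered, TARGET))
```

The ideal backdoored encoder drives both terms to zero, which illustrates why such a backdoor is hard to notice: on clean prompts it behaves exactly like the original.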

Logits are predictive of network type

This paper shows that it is possible to predict which deep network generated a given logit vector with accuracy well above chance. A classifier is trained on the logit vectors that a set of networks produce on the training set of a dataset, mapping each logit vector to the index of the network that generated it. Results are better for randomly initialized networks, but the approach also generalizes to pretrained and fine-tuned networks. Classification accuracy is higher with unnormalized logits than with normalized ones.
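The setup can be sketched with toy linear "networks" and a nearest-centroid classifier. Everything here is an assumption for illustration: the networks are random linear maps with a per-network bias, and nearest-centroid stands in for whatever classifier the paper trains. The `normalize` flag switches to softmax-normalized vectors; the toy is not meant to reproduce the paper's normalized-vs-unnormalized finding.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(v: Sequence[float]) -> Vector:
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def make_toy_network(rng: random.Random, in_dim: int, num_classes: int, bias_class: int) -> Callable[[Vector], Vector]:
    """Hypothetical 'network': a random linear map whose bias favors one class."""
    weights = [[rng.gauss(0.0, 0.5) for _ in range(in_dim)] for _ in range(num_classes)]
    bias = [3.0 if c == bias_class else 0.0 for c in range(num_classes)]

    def forward(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

    return forward


def centroid(vectors: List[Vector]) -> Vector:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def nearest_centroid(v: Vector, centroids: List[Vector]) -> int:
    return min(range(len(centroids)), key=lambda k: sum((a - b) ** 2 for a, b in zip(v, centroids[k])))


def run_experiment(seed: int = 0, n_train: int = 200, n_test: int = 100, normalize: bool = False) -> float:
    """Accuracy of predicting which toy network produced each test logit vector."""
    rng = random.Random(seed)
    in_dim, num_classes, num_networks = 4, 3, 3
    networks = [make_toy_network(rng, in_dim, num_classes, k) for k in range(num_networks)]

    def sample_inputs(n: int) -> List[Vector]:
        return [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(n)]

    def logits(net, xs: List[Vector]) -> List[Vector]:
        out = [net(x) for x in xs]
        return [softmax(v) for v in out] if normalize else out

    # Every network sees the same inputs; only the network index is the label.
    train_x, test_x = sample_inputs(n_train), sample_inputs(n_test)
    centroids = [centroid(logits(net, train_x)) for net in networks]

    correct = total = 0
    for k, net in enumerate(networks):
        for v in logits(net, test_x):
            correct += nearest_centroid(v, centroids) == k
            total += 1
    return correct / total


if __name__ == "__main__":
    print("unnormalized:", run_experiment())
    print("softmax-normalized:", run_experiment(normalize=True))
```

With three networks, chance accuracy is 1/3; the sketch only shows that network identity can leak through logit statistics.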

Robustness of Fusion-based Multimodal Classifiers to Cross-Modal Content Dilutions

This paper investigates the robustness of multimodal classifiers to cross-modal dilutions. The authors develop a model that generates additional dilution text that leads to misclassification of the multimodal input. Experiments on two tasks show that the performance of task-specific fusion-based multimodal classifiers drops significantly in the presence of dilutions generated by the model.

Evaluating and Improving Factuality in Multimodal Abstractive Summarization

This paper proposes a new metric, CLIPBERTScore, for evaluating the factuality of abstractive document summarization that also takes the visual modality into account. The metric combines CLIPScore, applied to the image-summary pair, with BERTScore, applied to the document-summary pair, to leverage the robustness and strong factuality-detection performance of each. The paper shows that this new metric outperforms existing factuality metrics for document summarization and performs competitively with strong multimodal factuality metrics fine-tuned specifically for the task.
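A minimal sketch of the combination, assuming CLIPBERTScore is a weighted sum of an image-summary term and a document-summary term. The embeddings are toy vectors, `weight=0.5` is an arbitrary choice, BERTScore is simplified (greedy max-cosine matching, no idf weighting, no baseline rescaling), and calling CLIPScore with `w=1.0` so both terms sit on a cosine scale is our choice, not necessarily the paper's.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def cosine(a: Vec, b: Vec) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def clip_score(image_emb: Vec, text_emb: Vec, w: float = 2.5) -> float:
    """CLIPScore (Hessel et al., 2021): w * max(cos(image, text), 0)."""
    return w * max(cosine(image_emb, text_emb), 0.0)


def bert_score_f1(cand_tokens: List[Vec], ref_tokens: List[Vec]) -> float:
    """Simplified BERTScore F1 via greedy max-cosine token matching."""
    precision = sum(max(cosine(c, r) for r in ref_tokens) for c in cand_tokens) / len(cand_tokens)
    recall = sum(max(cosine(r, c) for c in cand_tokens) for r in ref_tokens) / len(ref_tokens)
    if precision + recall <= 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def clip_bert_score(
    image_emb: Vec,
    summary_emb: Vec,
    summary_tokens: List[Vec],
    document_tokens: List[Vec],
    weight: float = 0.5,
) -> float:
    """Hypothetical weighted combination: weight * image-summary + (1 - weight) * document-summary."""
    image_term = clip_score(image_emb, summary_emb, w=1.0)  # keep both terms on a cosine scale
    text_term = bert_score_f1(summary_tokens, document_tokens)
    return weight * image_term + (1 - weight) * text_term


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0]]
    print(clip_bert_score([1.0, 0.0], [1.0, 0.0], tokens, tokens))
    print(clip_bert_score([0.0, 1.0], [1.0, 0.0], tokens, tokens))
```

A summary that matches the document but contradicts the image loses credit only through the image term, which is the extra signal the visual modality contributes.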

The Path to Autonomous Learners

This paper presents a new theoretical approach for enabling intelligent systems to acquire domain knowledge. It introduces a hybrid model that starts with minimal input knowledge in the form of an upper ontology of concepts, stores and reasons over this knowledge in a knowledge graph database, and learns new information through a Logic Neural Network. The authors study how this architecture behaves when handling new data and show that the final system can both enrich its current knowledge and extend it to new domains.

2. Noteworthy open-source projects & datasets

Open-Vocabulary Semantic Segmentation with Mask-adapted CLIP

Focal Modulation Networks: an attention-free architecture that outperforms state-of-the-art self-attention models

Warp: a Python framework for writing high-performance simulation and graphics code

The Stack: over 3 TB of permissively licensed source code files covering 30 programming languages, crawled from GitHub

ImageNet-X: a set of human annotations pinpointing failure types for the popular ImageNet dataset

3. ICYMI interviews

OpenAI's Greg Brockman: The Future of LLMs, Foundation & Generative Models (DALL·E 2 & GPT-3)

Codex demo: solving complex problems with multiple iterations (around minute 4:30)

4. From around the web

Thank you for reading this week's newsletter! I hope you found it useful and that you learned something new. I'd love to hear your feedback, whether there's a topic you'd like me to write more about or you'd just like to share some thoughts.